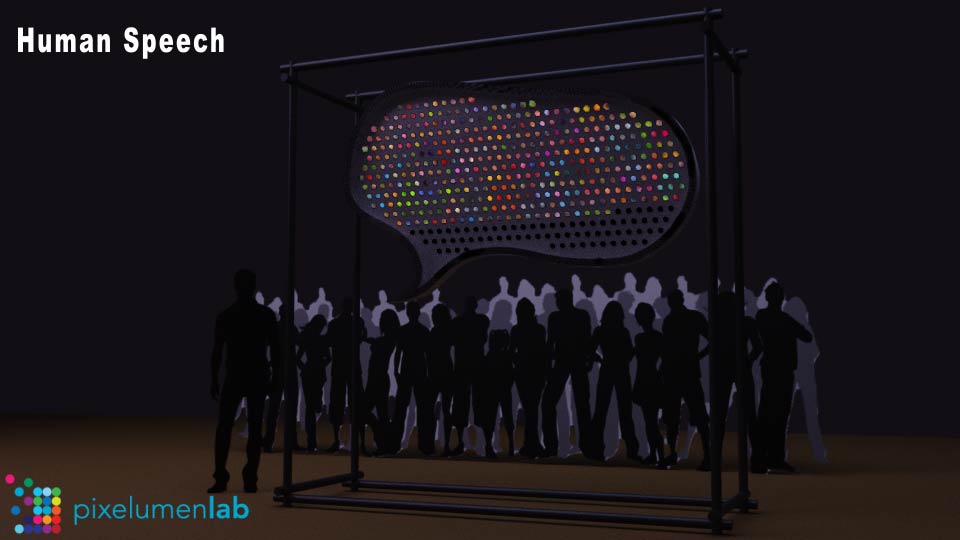

HUMAN SPEECH

A proposed new lighting installation.

We are proposing a new artwork installation that asks the following questions: What do the sounds of voices look like as light? What color comes from certain sounds or pitches? If there are enough voices, do the blended sounds become homogeneous, thus making the light homogeneous? If light were a sound, what would a particular color sound like? Could we, as humans, decipher those sounds as language?

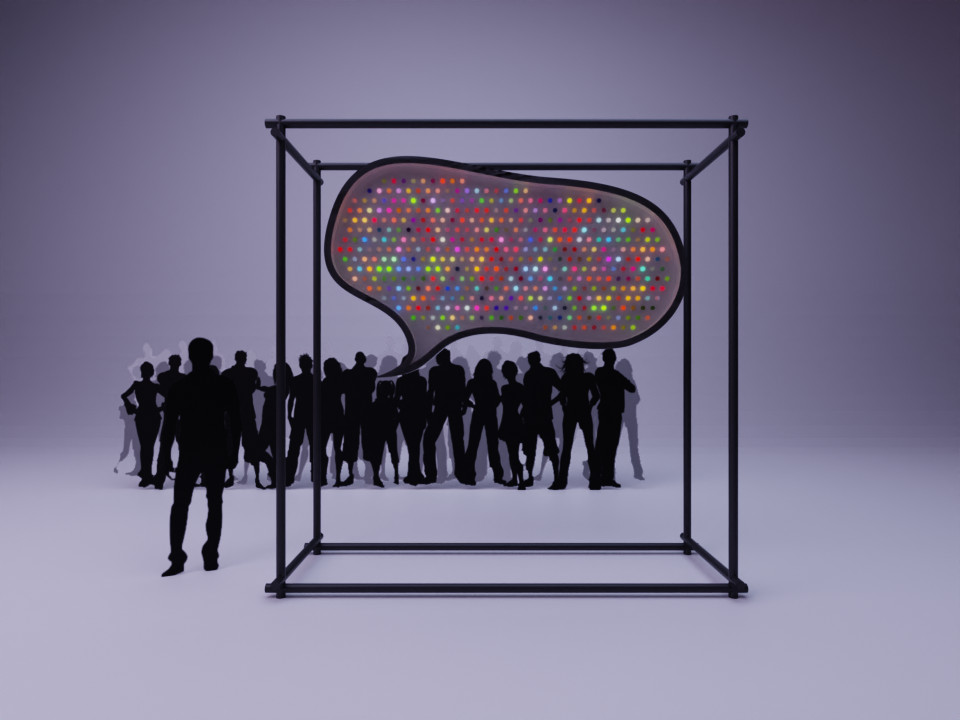

Our proposed artwork is a new installation that is about capturing the atmosphere and ambience of the festival’s attendees. In particular, the sounds of the attendees’ speech and their movement, and translating that sound into light. After the sound has been translated into light, then the light will be translated into sound.

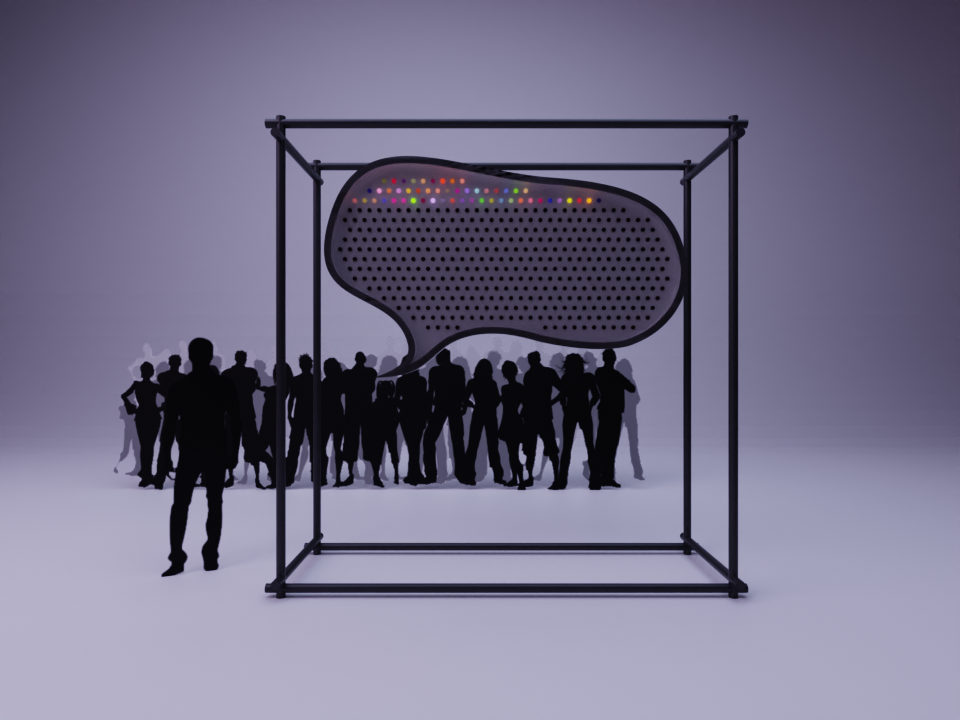

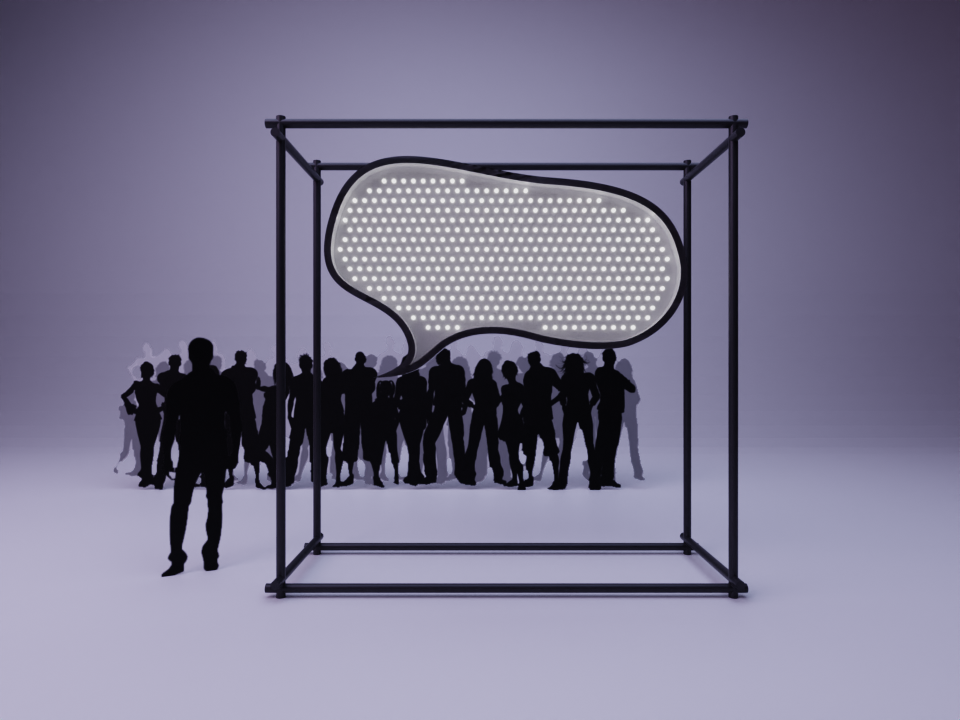

Visually, our sculpture will resemble a speech bubble, like the kind seen in many comics and graphic novels. The speech bubble, suspended in a free-standing structure, can be set up wherever there’s foot traffic. The installation will utilize microphones to capture the sounds of the crowd and instantly process that auditory data into light. Using customized software, the auditory data will be analyzed for pitch and frequency. The sound data will be interpolated into color (red, green, blue, and white) values using the 0-255 decimal system. Using a DMX interface, we will be able to show color as light using these values. After the software translates the incoming sounds into color, the color value will then in turn be translated into a musical note and played back as sound.

The sculpture will comprise approximately 400 individual LED nodes inside a sculptural shape of a speech bubble that is approximately 2.9m wide by 1.5m high. The speech bubble will be suspended from a free-standing structure with an overall proposed footprint of 3.4m wide by 3.4m high by 1m deep. Our primary objective is to scan all sounds of human speech in the crowds that pass by the installation, translate that information into color and light, and then translate again the color data back into sound.

We have a working prototype of the software that converts sounds to light and light to sound. Given time and resources, we also want to explore adding in and using A.I. software to do a more nuanced analysis of the sounds of the crowd. For instance, how often would festival attendees passing by the installation use the word “LIGHT,” and in how many languages? Can we decipher this word, across multiple languages, and trigger a particular look in our installation each time the word is recognized?